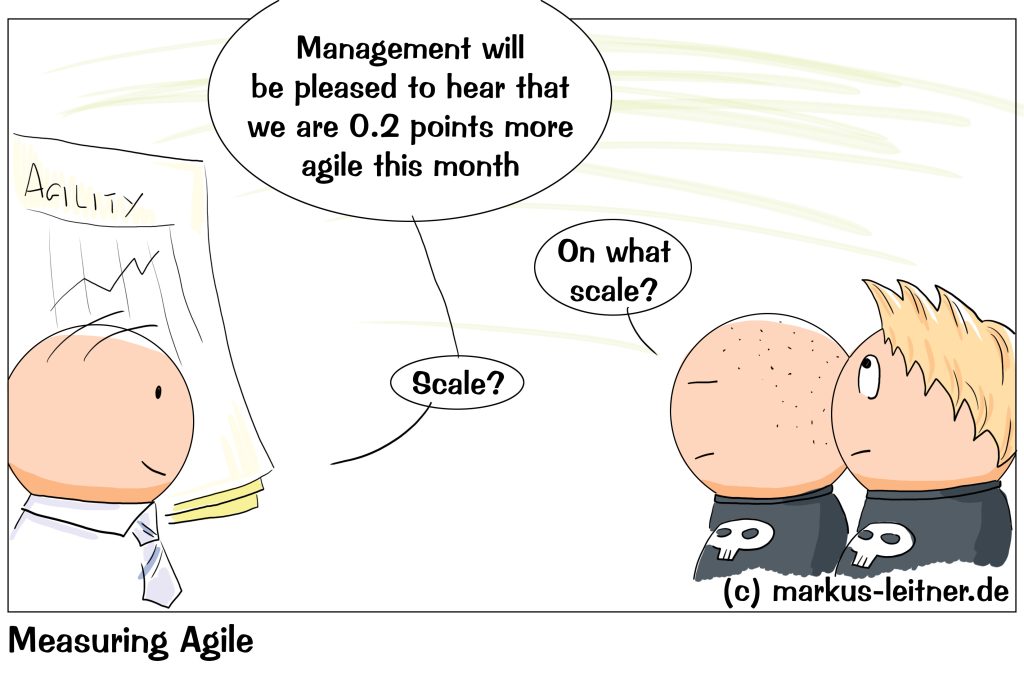

After we have – more or less successfully – introduced agility in our organizational unit, sooner or later we also want to know what has changed as a result and whether our efforts have been worthwhile. We also want to know whether the measures from our retrospectives have brought about any change. In short: we want to find out what is changing and how it is changing.

That actually applies to everything we do in our corporate context, doesn’t it?

What is the point of making a change and then not checking to see if that change is having the desired effect? Of course, this does not only refer to the agile world. Every measure in organizational development is driven by some kind of motivation. We want to change something because we hope it will improve things.

This is where our problem generally begins, because we are not specific enough. The development speed is too slow, so we come up with a measure to speed things up, but what criteria do we use to know that we have a problem? Are we talking about a gut feeling or about quantifiable data?

We like to do a retrospective or a lessons-learned session at the end of a project or any similar event to collect topics that we think did not go particularly well and where we see room for improvement. Then we might take a look at the status quo, what the processes look like at the moment, and which steps can perhaps be accelerated or left out – so far so good.

Or not.

The reason for this discussion and the search for a solution is usually a feeling. But when do we start talking about numbers? For example, we say that our work results have been staying in testing for too long and we ask ourselves how we can speed this up. But how long is our stuff actually staying in testing? Did we measure that? If yes, what is the average value? Do we think the average is okay, and there are only a few isolated outliers? Or is the average too high? Where should it be? These are the key questions.

Every action we take has to be quantifiable in some way, otherwise we are just talking about a feeling. In the testing example, we ask what value we would like to have. At the end of the day, this is how we measure the success of our action. If we have achieved our desired value, our measure was successful. If we do not reach it, let us dig deeper: is our desire even realistic (a question we should be asking much earlier)? How much have we achieved? 60% of what we wanted? Is that enough for us? What else can we do to reach 100%?
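To make the "60% of what we wanted" question concrete, here is a minimal sketch of how the degree of target achievement could be calculated. The function name and the example figures (a baseline of 40 hours in testing, a desired value of 20 hours, a measured value of 28 hours) are assumptions for illustration only, not data from a real team.

```python
def target_achievement(baseline, target, current):
    """Return the share of the planned improvement that was actually reached.

    1.0 means the desired value was reached, 0.0 means nothing changed,
    and a negative result means things got worse.
    Works for values we want to lower as well as values we want to raise.
    """
    planned_change = baseline - target
    if planned_change == 0:
        raise ValueError("baseline and target must differ")
    return (baseline - current) / planned_change


if __name__ == "__main__":
    # Hypothetical example: average hours an item spends in testing.
    share = target_achievement(baseline=40, target=20, current=28)
    print(f"Reached {share:.0%} of the desired improvement")
```

With these assumed figures, the team wanted to cut 20 hours and has cut 12, so it has reached 60% of the desired improvement. Whether that is enough is still a decision for the team, but it is now a decision based on a number.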

The moment we start talking about numbers – already with our motivation – we become much more concrete and, above all, say goodbye to feelings and impressions.

A much more effective approach in our retrospectives is therefore the following:

We collect things that we think do not work particularly well. At this step we are still dealing with impressions and feelings, and that is perfectly fine; we have to start somewhere. But in the very next step we ask about the values. In the testing example, we immediately ask how long things have been staying in testing. Is that waiting time or processing time? Did we measure these times? If not, how can we measure them? That would be the very first step: we find a way to measure the waiting time, processing time and throughput time in testing. We then evaluate these numbers. Only then do we have a truly reliable picture of the current status. We now compare this with a desired value and identify our measures.

This may seem terribly complicated and tedious to all of you, and you are not entirely wrong. In this example, we would go through two iterations because we first collect the data and only then identify and implement measures. It may be much easier to implement a measure directly, but we must be aware that verifiability then largely falls by the wayside. We will then only ever be able to work with impressions.

This is the dilemma we find ourselves in, and where we have to decide every time whether we want to go the long way, which is cleaner and gives us reliable numbers, or whether we want to do things quick and dirty, because it is just faster.

My recommendation: for many small things, you can definitely take the fast route. We try something, and after a while we ask everyone involved whether things have improved. If the result is unanimously positive, we are satisfied and leave it at that.

However, as soon as we find ourselves facing a more complex problem or operating at a scaled level of the organization, we should choose the path of quantifiability. We are going to have a lot of discussions, and sooner or later someone we need will ask us what tells us that things aren’t going well at the moment. If we can then present figures that are easy to understand, any further discussion becomes much easier.

And as soon as we operate at a scaled level, at some point someone will quite rightly ask about the success of our measures. How do we want to answer that? "It got better"? We simply have to present numbers: average throughput times reduced by x percent, costs reduced by y percent. Our counterpart will expect that, and we would do well to deliver exactly that. Not only because it keeps anyone from wringing our necks, but also because it is the only way to determine the success of our actions. What is the value now? Where do we want to go? How do we achieve this? When do we check whether we have reached the desired value? Everything else is impression and feeling, and with those we always make life very difficult for ourselves in a scaled context.

In a nutshell: the success of a measure can only be determined using quantifiable parameters; everything else is impression and feeling. For small team-internal measures from a retrospective, we can do without numerical values, provided we ask the team after a certain time whether the desired effect has set in. If we are dealing with a more complex problem or working at a scaled organizational level, we should always work with numerical values.

If you need any assistance or want to know more, just get in touch with me.